It was a pleasure to speak at the AWS/CSU Research in the Cloud series. By nature I am not a strong promoter of any technology, and the browser, OS or editor “wars” frankly bore me; I sometimes use a “lesser” technology because it happens to be more convenient, or because I don’t have the time to learn a “better” technology, or for many other good reasons.

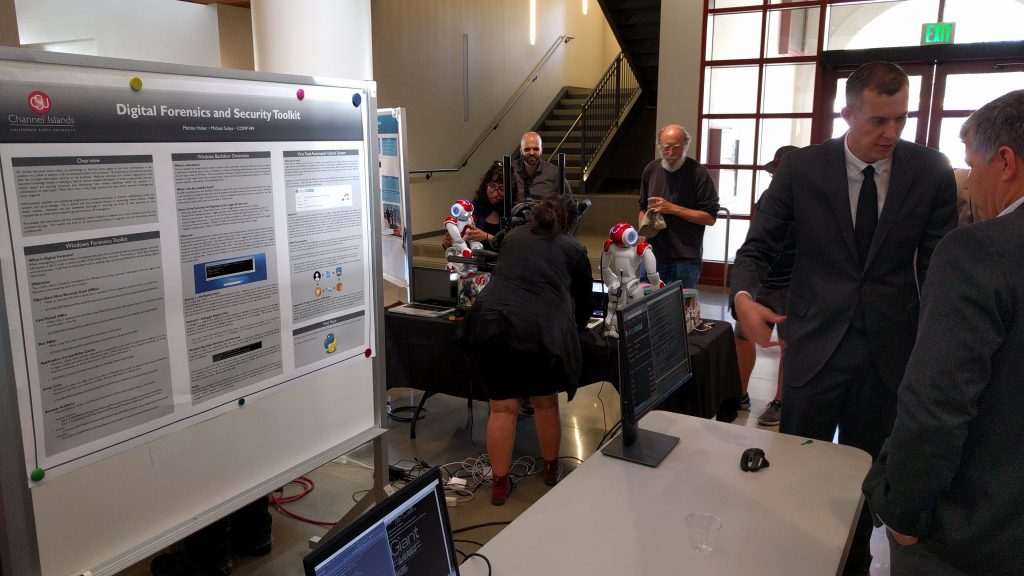

However, as a researcher and teacher I am absolutely thrilled with what AWS has to offer. I regularly give tours of our computer labs at CSU CI (to local companies, prospective graduate students, CSU trustees, fundraising prospects, etc.), and I explain that three things make it possible for a relatively small and unknown campus like ours to compete in scientific & engineering output in the national and international arena:

- How cheap embedded systems have become; a Raspberry Pi is $35, and it comes with Linux and GPIO pins that make it into a universal controller.

- How cheap 3D printing has become, which in turn frees us, to some extent, from having to build an expensive manufacturing lab.

- And AWS: Amazon Web Services. Instead of buying, maintaining, cooling and powering expensive servers, we can immediately use the services we need, and pay as we go. This works very well for a university because we do not have to make up-front capital investments, and our usage varies over the year (e.g., practically no classes in the summer).

Material related to the talk

- Examples of AWS-related projects that my students and I have undertaken over the last year: http://prof.msoltys.com/?tag=aws.

- AWS presentation slides.

- Video of the presentation (my talk starts at about the 12-minute mark).