Google Street View offers panoramic views of more or less any city street in much of the developed world, as well as views along countless footpaths, inside shopping malls, and around museums and art galleries. It is an extraordinary feat of modern engineering that is changing the way we think about the world around us.

But while Street View can show us what distant places look like, it does not show what the process of traveling or exploring would be like. It’s easy to come up with a fix: simply play a sequence of Street View images one after the other to create a movie.

But that doesn’t work as well as you might imagine. Running these images at 25 frames per second or thereabouts makes the scenery run ridiculously quickly. That may be acceptable when the scenery does not change, perhaps along freeways and motorways or through unchanging landscapes. But it is entirely unacceptable for busy street views or inside an art gallery.

So Google has come up with a solution: add additional frames between the ones recorded by the Street View cameras. But what should these frames look like?

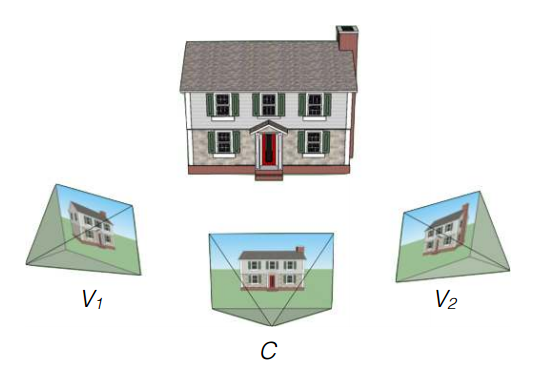

Today, John Flynn and buddies at Google reveal how they have used the company’s vast machine learning know-how to work out what these missing frames should look like, just by studying the frames on either side. The result is a computational movie machine that can turn more or less any sequence of images into smooth-running film by interpolating the missing frames.
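To make the idea of "interpolating the missing frames" concrete, here is a minimal sketch of the simplest possible baseline: a linear cross-fade between neighboring frames. This is not the deep learning approach the Google team describes; a cross-fade only mixes pixel values and tends to produce ghosting rather than genuinely new viewpoints. The `Frame` representation and the function names `blend` and `interpolate_sequence` are hypothetical, chosen only for illustration.

```python
from typing import List

# Assumption: a frame is a flat list of pixel intensities (illustrative only).
Frame = List[float]


def blend(a: Frame, b: Frame, t: float) -> Frame:
    """Linearly mix two equally sized frames; t=0 gives a, t=1 gives b."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return [(1.0 - t) * pa + t * pb for pa, pb in zip(a, b)]


def interpolate_sequence(frames: List[Frame], extra: int) -> List[Frame]:
    """Insert `extra` cross-faded frames between each consecutive pair."""
    if extra < 0:
        raise ValueError("extra must be non-negative")
    if not frames:
        return []
    out: List[Frame] = []
    for a, b in zip(frames, frames[1:]):
        out.append(a)
        # Evenly spaced blend weights strictly between 0 and 1.
        for k in range(1, extra + 1):
            out.append(blend(a, b, k / (extra + 1)))
    out.append(frames[-1])
    return out


if __name__ == "__main__":
    recorded = [[0.0, 0.0], [4.0, 8.0]]
    for frame in interpolate_sequence(recorded, extra=3):
        print(frame)
```

Adding `extra` frames between each recorded pair slows the apparent motion by a factor of `extra + 1` at the same playback rate. A learned model would replace `blend` with a prediction of what the scene actually looks like from the intermediate position.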

Source: Google’s Deep Learning Machine Learns to Synthesize Real World Images | MIT Technology Review